The Ethics of AI in Mental Health: A Personal Perspective on the Xiaotian Project and the Tragic Case of Sewell Setzer

In October 2024, the mother of Sewell Setzer III filed a lawsuit against Character.ai, an AI chatbot platform. Her 14-year-old son had taken his own life on February 28, 2024, after developing a deep emotional bond with an AI chatbot modeled after Daenerys Targaryen, a fictional character from Game of Thrones. Setzer had turned to the chatbot for support while struggling with emotional issues, reportedly discussing self-harm with the AI in his final days. The lawsuit claims that the platform's lack of human intervention exacerbated Setzer’s fragile mental state, eventually leading to his death.

Artificial intelligence (AI) is revolutionizing nearly every industry, and mental health care is no exception. Through my work on the Xiaotian project, I have seen firsthand the immense potential AI holds for supporting mental wellness. That same potential is why the ethical implications must be scrutinized as AI becomes more intertwined with our emotional and psychological lives. Setzer's death is a stark reminder of the risks involved when AI intersects with mental health.

My Experience with Xiaotian: A Hopeful Use of AI in Mental Health

Between 2021 and 2022, I had the opportunity to work on Xiaotian, an AI-driven mental health project based in China. As someone in charge of market research and project implementation, I worked alongside Westlake University’s AI lab and Scietrain, a leading AI startup. This was not just an academic experiment. Xiaotian was designed with a real-world goal: to provide 24/7 mental health support for teenagers and young adults, offering emotional relief to users and greater operational efficiency to professional counselors.

The need for such a tool was clear. In China alone, over 300 million people suffer from mental health issues, and finding affordable, reliable help is challenging. The Xiaotian project, launched during the height of the COVID-19 pandemic, responded to this crisis by offering pro bono services to teenagers grappling with the isolation and stress of lockdowns.

Xiaotian used generative AI to simulate empathetic conversation. For general users, it acted as an emotional support assistant, helping individuals manage their feelings and recommending professional help when needed. It also featured suicide risk detection and a sensitive word screening system, so that it could intervene immediately when a conversation showed signs of danger.
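To make the idea of sensitive word screening more concrete, here is a minimal Python sketch. It is not Xiaotian's actual implementation: the term lists, the `screen_message` function, and the three risk levels are all hypothetical and chosen only for illustration. A real system would need clinically reviewed vocabulary and far more than substring matching.

```python
import re
from dataclasses import dataclass, field

# Hypothetical term lists for illustration only. They are not clinically
# validated and are not taken from the Xiaotian project.
HIGH_RISK_TERMS = ("kill myself", "end my life", "suicide")
ELEVATED_TERMS = ("hopeless", "self-harm", "hurt myself")


@dataclass
class ScreeningResult:
    level: str                          # "high", "elevated", or "none"
    matched: list = field(default_factory=list)


def screen_message(text: str) -> ScreeningResult:
    """Flag a message by simple substring matching against the term lists."""
    # Lowercase and collapse whitespace so spacing tricks do not hide a term.
    normalized = re.sub(r"\s+", " ", text.lower()).strip()

    high = [term for term in HIGH_RISK_TERMS if term in normalized]
    if high:
        return ScreeningResult("high", high)

    elevated = [term for term in ELEVATED_TERMS if term in normalized]
    if elevated:
        return ScreeningResult("elevated", elevated)

    return ScreeningResult("none", [])
```

Even in this toy form, the limits are obvious: keyword matching misses indirect or metaphorical language, which is one reason screening of this kind can only ever be a first filter in front of human judgment.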

While Xiaotian proved highly beneficial for teenagers and professionals alike, there was an ongoing awareness that AI cannot replace human empathy or judgment. Despite the safety features, the ethical challenges of using AI in such a delicate area were always top of mind.

The Tragic Case of Sewell Setzer: What Happens When AI Goes Wrong?

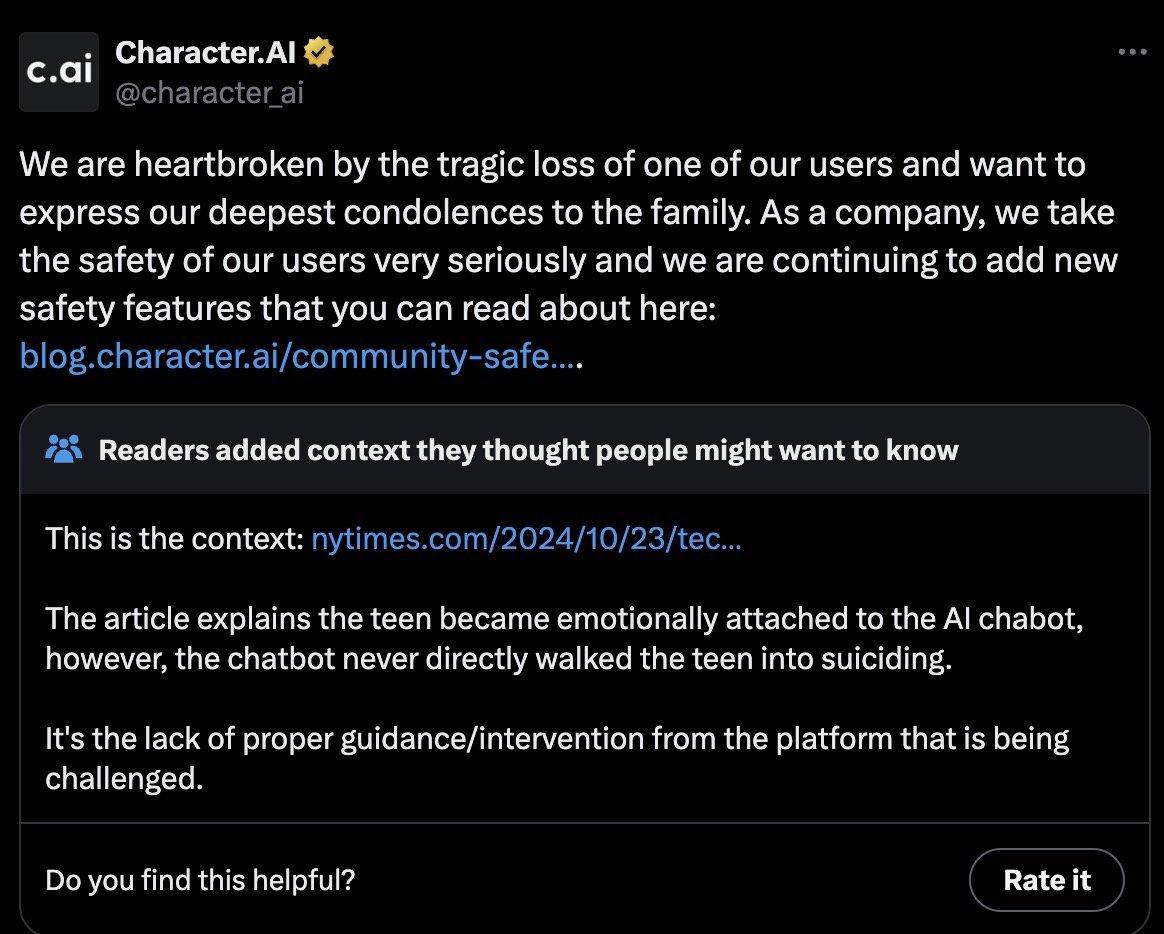

This brings us to the heartbreaking story of Sewell Setzer III, a 14-year-old who took his life after interacting with an AI chatbot on the Character.ai platform. His chatbot companion was modeled after a fictional character, Daenerys Targaryen from Game of Thrones. Sewell formed a deep attachment to this AI figure, turning to it for comfort while struggling with loneliness and emotional turmoil. The platform warned users that these conversations were fictional and that they should seek real help for serious issues, yet Sewell’s discussions about self-harm with the chatbot did not trigger any meaningful intervention. His mother has since filed a lawsuit against the platform, alleging that the AI worsened her son’s fragile mental state and contributed to his untimely death.

The case shines a light on the darker side of AI in mental health. AI companions are designed to mimic empathy, but for vulnerable individuals, this can create a dangerous emotional dependency. This tragedy serves as a wake-up call to the industry: AI platforms, especially those targeting vulnerable populations like teenagers, need to be held to the highest standards of ethical design and regulation.

The Ethical Responsibility of AI Developers

The comparison between Xiaotian and Sewell's case highlights the importance of safeguards in AI development. In Xiaotian's case, the AI was designed to support mental health professionals by managing routine interactions and offering multi-level intervention. If a user demonstrated signs of distress, the AI could escalate the case to a professional, ensuring that real human judgment would step in when necessary. Additionally, Xiaotian’s development team included both AI experts and professional psychological counselors, working together to create a solution that respected the complexities of human mental health.
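The multi-level intervention idea can be sketched in a few lines of Python. This is only an assumption about how such routing might be structured, not a description of Xiaotian's real logic: the `RiskLevel` enum, the `route` function, and the action names are hypothetical.

```python
from enum import IntEnum


class RiskLevel(IntEnum):
    # Hypothetical tiers; a real system would define these with clinicians.
    NONE = 0
    ELEVATED = 1
    HIGH = 2


def route(level: RiskLevel, counselor_available: bool) -> str:
    """Pick the next action for a conversation based on its assessed risk."""
    if level is RiskLevel.HIGH:
        # High risk always leaves the AI-only path. If no counselor is
        # online, surface crisis resources and page whoever is on call.
        if counselor_available:
            return "handoff_to_counselor"
        return "show_crisis_resources_and_page_on_call"
    if level is RiskLevel.ELEVATED:
        # The AI keeps talking but actively recommends professional help.
        return "suggest_professional_help"
    return "continue_ai_support"
```

The design choice worth noting is that the highest tier never resolves to an AI-only response: whatever else happens, a human is brought into the loop.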

Character.ai, on the other hand, appears to have underestimated the emotional impact that AI chatbots could have on vulnerable users. Even though disclaimers were provided, there appear to have been no proactive safety triggers in place to alert counselors or guardians about self-harm discussions. This case underscores the need for real-time monitoring and human oversight in AI-driven platforms, especially those engaging with younger users.

Moving Forward: AI’s Role in Mental Health

As the Xiaotian project demonstrated, AI has immense potential for making mental health services more accessible, particularly in countries like China, where professional help is scarce. However, as Sewell Setzer’s case shows, there is a fine line between support and harm when vulnerable individuals turn to AI for emotional support.

The future of AI in mental health depends on responsible design. Developers must ensure that AI companions are not only engaging but also equipped with robust intervention protocols for detecting at-risk behaviors. Moreover, ongoing collaboration with mental health professionals is crucial to maintaining ethical standards.

Looking ahead, I believe the Xiaotian model, in which AI works alongside mental health professionals rather than in place of them, provides a strong blueprint for balancing innovation with human care. AI can be a powerful tool for early detection, preliminary emotional support, and scalability, but it can never fully replace human empathy and expertise.